12 new KPIs for the generative AI search era

SEO teams have built dashboards around a shared set of familiar metrics for over two decades: clicks, rankings, impressions, bounce rate, link volume, etc. These KPIs powered strategies, reports, and… promotions.

But what happens when the interface between your audience and your brand no longer involves a search result page?

As search fragments into AI chat interfaces, smart assistants, and zero-click responses, a seismic shift is underway. The old KPIs – built for blue links and browser sessions – are becoming relics. And while many still have value, a new class of performance signals is emerging that better aligns with how information is retrieved, ranked, and presented by modern AI systems.

This isn’t just a retooling of analytics. It’s a redefinition of what “visibility” and “authority” mean in a search environment dominated by retrieval-augmented generation (RAG), embeddings, vector databases, and large language models.

It’s time to start tracking what will actually matter tomorrow, not just what used to matter yesterday.

The old SEO dashboard: Familiar, but fading

Traditional SEO metrics evolved alongside the SERP. They reflected performance in a world where every search led to a list of 10 blue links and the goal was to be one of them. Common KPIs included:

- Organic sessions

- Click-through rate (CTR)

- Average position

- Bounce rate & time on site

- Pages per session

- Backlink count

- Domain Authority (DA)*

*A proprietary metric from Moz, often used as shorthand for domain strength, though never a formal search engine signal.

These metrics were useful, especially for campaign performance or benchmarking. But they all had one thing in common: they were optimized for human users navigating Google’s interface, not machine agents or AI models working in the background.

What changed: A new stack for search

We’ve entered the era of AI-mediated search. Instead of browsing results, users now ask questions and receive synthesized answers from platforms like ChatGPT, Copilot, Gemini, and Perplexity. Under the hood, those answers are powered by an entirely new stack:

- Vector databases

- Embeddings

- BM25 and Reciprocal Rank Fusion (RRF) for hybrid ranking and re-ranking

- LLMs (like GPT-4, Claude, Gemini)

- Agents and plugins running AI-assisted tasks

In this environment, your content isn’t “ranked” – it’s retrieved, reasoned over, and maybe (if you’re lucky) cited.

12 emerging KPIs for the generative AI search era (with naming logic)

Please keep in mind, these are simply my ideas. A starting point. Agree or don’t. Use them or don’t. Entirely up to you. But if all this does is start people thinking and talking in this new direction, it was worth the work to create it.

1. Chunk retrieval frequency

- How often a modular content block is retrieved in response to prompts.

- Why we call it that: “Chunks” reflect how RAG systems segment content, and “retrieval frequency” quantifies LLM visibility.

2. Embedding relevance score

- Similarity score between query and content embeddings.

- Why we call it that: Rooted in vector math; this reflects alignment with search intent.

3. Attribution rate in AI outputs

- How often your brand/site is cited in AI answers.

- Why we call it that: Based on attribution in journalism and analytics, now adapted for AI.

4. AI citation count

- Total references to your content across LLMs.

- Why we call it that: Borrowed from academia. Citations = trust in AI environments.

5. Vector index presence rate

- The % of your content successfully indexed into vector stores.

- Why we call it that: Merges SEO’s “index coverage” with vector DB logic.

6. Retrieval confidence score

- The model’s likelihood estimation when selecting your chunk.

- Why we call it that: Based on probabilistic scoring used in model decisions.

7. RRF rank contribution

- How much your chunk influences final re-ranked results.

- Why we call it that: Pulled directly from Reciprocal Rank Fusion models.

8. LLM answer coverage

- The number of distinct prompts your content helps resolve.

- Why we call it that: “Coverage” is a planning metric now adapted for LLM utility.

9. AI model crawl success rate

- How much of your site AI bots can successfully ingest.

- Why we call it that: A fresh spin on classic crawl diagnostics, applied to GPTBot et al.

10. Semantic density score

- The richness of meaning, relationships, and facts per chunk.

- Why we call it that: Inspired by academic “semantic density” and adapted for AI retrieval.

11. Zero-click surface presence

- Your appearance in systems that don’t require links to deliver answers.

- Why we call it that: “Zero-click” meets “surface visibility” — tracking exposure, not traffic.

12. Machine-validated authority

- A measure of authority as judged by machines, not links.

- Why we call it that: We’re reframing traditional “authority” for the LLM era.
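To make KPI 7 a little more concrete, here is a minimal Python sketch of Reciprocal Rank Fusion. The ranker names, chunk IDs, and the constant k = 60 (a commonly used default) are assumptions for illustration. Treating each ranker's 1 / (k + rank) term as a chunk's "contribution" is one possible reading of the KPI, not an established standard.

```python
from collections import defaultdict


def rrf_contributions(rankings, k=60):
    """Return each chunk's per-ranker RRF term, 1 / (k + rank), with rank starting at 1.

    rankings: dict mapping a ranker name to a list of chunk IDs, best first.
    """
    contributions = defaultdict(dict)
    for ranker, ranked_ids in rankings.items():
        for rank, chunk_id in enumerate(ranked_ids, start=1):
            contributions[chunk_id][ranker] = 1.0 / (k + rank)
    return dict(contributions)


def fused_ranking(contributions):
    """Sum the per-ranker terms and sort chunks by fused score, highest first."""
    totals = {cid: sum(parts.values()) for cid, parts in contributions.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical rankings from a keyword ranker and a vector ranker.
rankings = {
    "bm25": ["pricing-faq", "setup-guide", "changelog"],
    "vector": ["setup-guide", "pricing-faq", "api-reference"],
}

contrib = rrf_contributions(rankings)
fused = fused_ranking(contrib)
for chunk_id, score in fused:
    print(f"{chunk_id}: fused={score:.5f} parts={contrib[chunk_id]}")
```

A chunk that appears near the top of both lists collects two terms and rises in the fused order, while a chunk seen by only one ranker collects a single, smaller total.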

Visualizing KPI change and workflow positioning

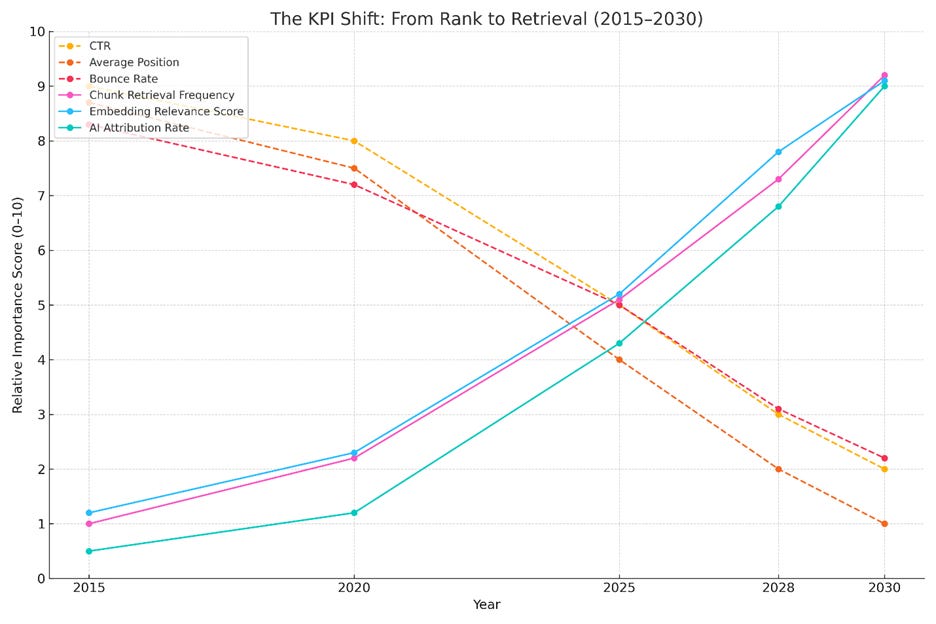

This first chart visualizes the evolving importance of performance metrics in search and discovery environments from 2015 through 2030. Traditional SEO KPIs such as click-through rate (CTR), average position, and bounce rate steadily decline as their relevance diminishes in the face of AI-driven discovery systems.

In parallel, AI-native KPIs like chunk retrieval frequency, embedding relevance score, and AI attribution rate show a sharp rise, reflecting the growing influence of vector databases, LLMs, and retrieval-augmented generation (RAG). The crossover point around 2025–2026 highlights the current inflection in how performance is measured, with AI-mediated systems beginning to eclipse traditional ranking-based models.

The projections through 2030 reinforce that while legacy metrics may never fully disappear, they are being gradually overtaken by retrieval- and reasoning-based signals – making now the time to start tracking what truly matters.

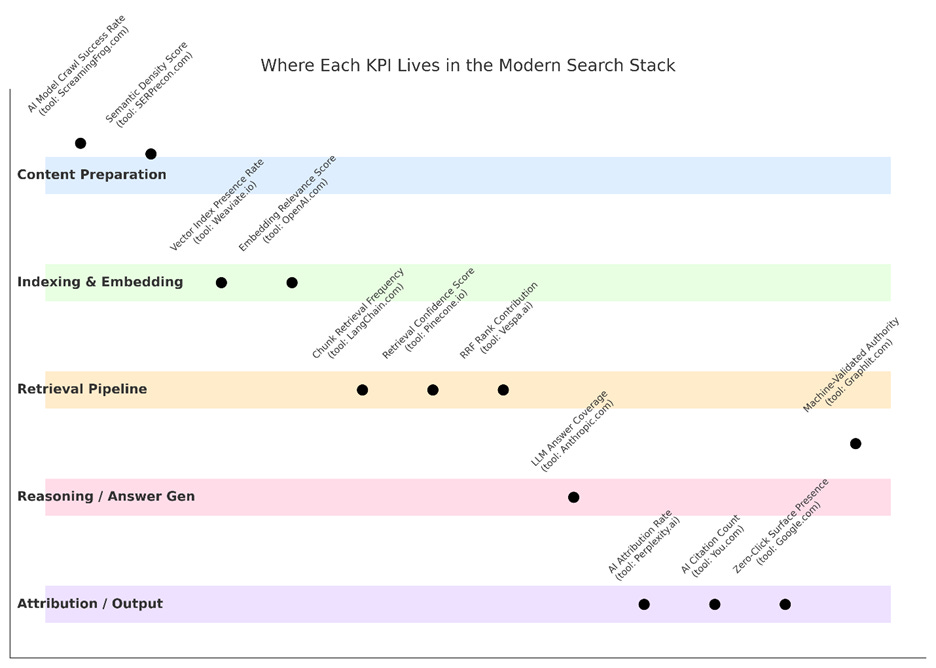

Where each KPI lives in the modern search stack

Traditional SEO metrics were built for the end of the line – what ranked, what was clicked. But in the generative AI era, performance isn’t measured solely by what appears in a search result. It’s determined across every layer of the AI search pipeline: how your content is crawled, how it’s chunked and embedded, whether it’s retrieved by a query vector, and if it’s ultimately cited or reasoned over in a machine-generated answer.

This second diagram maps each of the 12 emerging KPIs to its functional home within that new stack. From content prep and vector indexing to retrieval weight and AI attribution, it shows where the action is — and where your reporting needs to evolve. It also bridges back to my last tactical guide by anchoring those tactics in the structure they’re meant to influence. Think of this as your new dashboard blueprint.

Here’s an easy-access list for the domains in the chart above:

AI model crawl success rate

↳ Tool: Screamingfrog.co.uk

↳ Stack Layer: Content Preparation

Semantic density score

↳ Tool: SERPrecon.com

↳ Stack Layer: Content Preparation

Vector index presence rate

↳ Tool: Weaviate.io

↳ Stack Layer: Indexing & Embedding

Embedding relevance score

↳ Tool: OpenAI.com

↳ Stack Layer: Indexing & Embedding

Chunk retrieval frequency

↳ Tool: LangChain.com

↳ Stack Layer: Retrieval Pipeline

Retrieval confidence score

↳ Tool: Pinecone.io

↳ Stack Layer: Retrieval Pipeline

RRF rank contribution

↳ Tool: Vespa.ai

↳ Stack Layer: Retrieval Pipeline

LLM answer coverage

↳ Tool: Anthropic.com

↳ Stack Layer: Reasoning / Answer Gen

AI attribution rate

↳ Tool: Perplexity.ai

↳ Stack Layer: Attribution / Output

AI citation count

↳ Tool: You.com

↳ Stack Layer: Attribution / Output

Zero-click surface presence

↳ Tool: Google.com

↳ Stack Layer: Attribution / Output

Machine-validated authority

↳ Tool: Graphlit.com

↳ Stack Layer: Cross-layer (Answer Gen & Output)

A tactical guide to building the new dashboard

These KPIs won’t show up in GA4 – but forward-thinking teams are already finding ways to track them. Here’s how:

1. Log and analyze AI traffic separately from web sessions

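Use server logs or CDNs like Cloudflare to identify AI crawlers such as GPTBot and CCBot. Note that Google-Extended is a robots.txt control token rather than a crawler with its own user-agent string, so it will not show up in your logs.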
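As a rough starting point, the sketch below counts AI crawler requests in access logs and computes a simple success rate per bot. It assumes logs in the common "combined" format. The sample lines, the token list, and the function name are made up for illustration, so adjust the pattern to whatever your server or CDN actually writes.

```python
import re
from collections import Counter

# User-agent tokens to look for. Illustrative only; extend for your own logs.
AI_BOT_TOKENS = ["GPTBot", "CCBot"]

# Assumes the "combined" access log format: request, status, size, referrer, user agent.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_ai_bot_hits(lines, bot_tokens=AI_BOT_TOKENS):
    """Count requests per AI bot and the share that returned a 2xx status."""
    hits = Counter()
    ok = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that do not fit the assumed format
        agent = match.group("agent").lower()
        for token in bot_tokens:
            if token.lower() in agent:
                hits[token] += 1
                if match.group("status").startswith("2"):
                    ok[token] += 1
                break
    return {
        token: {"requests": hits[token], "success_rate": ok[token] / hits[token]}
        for token in hits
    }


# Made-up sample lines for demonstration.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/May/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '203.0.113.5 - - [10/May/2025:10:00:02 +0000] "GET /old-page HTTP/1.1" 404 0 "-" "Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [10/May/2025:10:01:00 +0000] "GET /docs HTTP/1.1" 200 812 "-" "CCBot/2.0 (https://commoncrawl.org/faq/)"',
    '192.0.2.9 - - [10/May/2025:10:02:00 +0000] "GET / HTTP/1.1" 200 1000 "https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

summary = summarize_ai_bot_hits(SAMPLE_LOG)
for bot, stats in summary.items():
    print(bot, stats)
```

The per-bot success rate here is a crude proxy for the "AI model crawl success rate" KPI. User-agent strings can be spoofed, so treat the numbers as directional.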

2. Use RAG tools or plugin frameworks to simulate and monitor chunk retrieval

Run tests in LangChain or LlamaIndex:

- LangChain: https://python.langchain.com/docs/concepts/tracing/

- LlamaIndex: https://docs.llamaindex.ai/en/stable/understanding/tracing_and_debugging/tracing_and_debugging/
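Before wiring up a full framework, the idea behind chunk retrieval frequency can be sketched in plain Python. The example below uses simple word overlap as a stand-in for a real embedding-based retriever, which is an assumption made to keep it self-contained. It is not how LangChain or LlamaIndex retrieve, and the chunks and prompts are invented.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(prompt, chunks, top_k=2):
    """Return up to top_k chunk IDs ranked by Jaccard word overlap with the prompt."""
    prompt_tokens = tokenize(prompt)
    scored = []
    for chunk_id, text in chunks.items():
        chunk_tokens = tokenize(text)
        union = prompt_tokens | chunk_tokens
        score = len(prompt_tokens & chunk_tokens) / len(union) if union else 0.0
        scored.append((score, chunk_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [chunk_id for score, chunk_id in scored[:top_k] if score > 0]


def chunk_retrieval_frequency(prompts, chunks, top_k=2):
    """Share of test prompts for which each chunk lands in the top_k results."""
    counts = Counter()
    for prompt in prompts:
        counts.update(retrieve(prompt, chunks, top_k))
    return {chunk_id: counts[chunk_id] / len(prompts) for chunk_id in chunks}


# Hypothetical content chunks and test prompts.
chunks = {
    "pricing": "Our pricing starts at ten dollars per month for the basic plan.",
    "setup": "To install the app, download the installer and run the setup wizard.",
    "support": "Contact support by email for help with billing or setup problems.",
}
prompts = [
    "How much does the basic plan cost per month?",
    "How do I install the app?",
    "Who do I email about billing problems?",
]

frequency = chunk_retrieval_frequency(prompts, chunks)
for chunk_id, share in frequency.items():
    print(f"{chunk_id}: retrieved for {share:.0%} of prompts")
```

Swapping `retrieve` for a call into your actual retrieval pipeline keeps the counting logic the same while making the numbers meaningful.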

3. Run embedding comparisons to understand semantic gaps

Try:

- https://openai-embeddings.streamlit.app

- https://docs.cohere.com/v2/docs/embeddings

- https://www.pinecone.io/learn/what-is-similarity-search/

- https://www.trychroma.com
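Whichever embedding provider you use, the comparison usually comes down to cosine similarity between a query vector and a content vector. Here is a minimal sketch in plain Python. The three-dimensional vectors are hand-made placeholders, not real embeddings, which typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as having no similarity
    return dot / (norm_a * norm_b)


# Placeholder vectors standing in for embeddings of a query and two pages.
query_vec = [0.9, 0.1, 0.3]
page_vecs = {
    "pricing-page": [0.8, 0.2, 0.4],
    "careers-page": [0.1, 0.9, 0.2],
}

scores = {page: cosine_similarity(query_vec, vec) for page, vec in page_vecs.items()}
for page, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

A low score for a page you expect to answer a query is the "semantic gap" worth investigating.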

4. Track brand mentions in tools like Perplexity, You.com, ChatGPT
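If you save the answers these tools return for a fixed set of test prompts, two of the KPIs above reduce to simple counting. The sketch below is one way to do that. The brand terms and answers are invented, and plain substring matching is an assumption that will miss paraphrased or unlinked mentions.

```python
import re


def brand_pattern(brand_terms):
    """Build a case-insensitive pattern that matches any of the brand terms."""
    if not brand_terms:
        raise ValueError("at least one brand term is required")
    return re.compile("|".join(re.escape(term) for term in brand_terms), re.IGNORECASE)


def attribution_rate(answers, brand_terms):
    """Share of answers that mention the brand at least once."""
    if not answers:
        return 0.0
    pattern = brand_pattern(brand_terms)
    return sum(1 for answer in answers if pattern.search(answer)) / len(answers)


def citation_count(answers, brand_terms):
    """Total number of brand mentions across all answers."""
    pattern = brand_pattern(brand_terms)
    return sum(len(pattern.findall(answer)) for answer in answers)


# Hypothetical brand terms and saved AI answers.
BRAND_TERMS = ["Example Co", "example.com"]
answers = [
    "According to Example Co (example.com), the basic plan costs ten dollars.",
    "Several vendors offer this feature.",
    "See example.com for the full setup guide.",
    "No source was cited for this claim.",
]

rate = attribution_rate(answers, BRAND_TERMS)
count = citation_count(answers, BRAND_TERMS)
print(f"attribution rate: {rate:.0%}, citation count: {count}")
```

Running the same prompt set on a schedule gives you a trend line rather than a one-off snapshot.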

5. Monitor your site’s crawlability by AI bots

Check robots.txt for GPTBot, CCBot and Google-Extended access.

- https://openai.com/gptbot

- https://developers.google.com/search/docs/crawling-indexing/robots-meta-tag

- https://commoncrawl.org/ccbot
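This check can be scripted with Python's standard-library `urllib.robotparser`. The sketch below parses a robots.txt body and reports whether each AI user-agent token may fetch a given URL. The sample robots.txt and the URL are hypothetical. Python's parser applies rules in file order, which can differ from how a particular crawler resolves conflicting rules, so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser

# Tokens named in this article; extend as needed.
AI_AGENTS = ["GPTBot", "CCBot", "Google-Extended"]


def ai_bot_access(robots_txt, url, agents=AI_AGENTS):
    """Return {agent: True/False} for whether robots_txt lets each agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Hypothetical robots.txt: blocks GPTBot, allows CCBot, default rule for everyone else.
SAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Allow: /

User-agent: *
Disallow: /private/
"""

access = ai_bot_access(SAMPLE_ROBOTS, "https://example.com/blog/post")
for agent, allowed in access.items():
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

To test a live site, fetch its robots.txt yourself and pass the text in, or use the parser's `set_url` and `read` methods.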

6. Audit content for chunkability, entity clarity, and schema

Use semantic HTML, structure content, and apply schema markup.
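One rough way to audit chunkability is to split a page at its headings and see what each resulting block looks like. The sketch below does this with the standard-library HTML parser. Splitting on h1 to h3 and the 150-word review threshold are assumptions for illustration, since real RAG systems chunk in many different ways.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3"}


class HeadingChunker(HTMLParser):
    """Collect page text into chunks, starting a new chunk at every heading."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._in_heading = False
        self._current = {"heading": "", "text": ""}

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._flush()
            self._in_heading = True

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS:
            self._in_heading = False

    def handle_data(self, data):
        key = "heading" if self._in_heading else "text"
        self._current[key] += data + " "

    def _flush(self):
        heading = " ".join(self._current["heading"].split())
        text = " ".join(self._current["text"].split())
        if heading or text:
            self.chunks.append({"heading": heading, "text": text})
        self._current = {"heading": "", "text": ""}

    def close(self):
        super().close()
        self._flush()  # emit the final chunk


def audit_chunks(html, max_words=150):
    """Report word count per chunk and flag chunks with no heading or too many words."""
    chunker = HeadingChunker()
    chunker.feed(html)
    chunker.close()
    report = []
    for chunk in chunker.chunks:
        words = len(chunk["text"].split())
        needs_review = not chunk["heading"] or words > max_words
        report.append({"heading": chunk["heading"], "words": words, "needs_review": needs_review})
    return report


# Hypothetical page fragment.
SAMPLE_HTML = (
    "<p>Intro text without a heading.</p>"
    "<h2>Pricing</h2><p>Plans start at ten dollars.</p>"
    "<h2>Setup</h2><p>Download the installer.</p><p>Run the wizard.</p>"
)

report = audit_chunks(SAMPLE_HTML)
for row in report:
    print(row)
```

Blocks flagged for review are candidates for a clearer heading or for splitting into smaller, self-contained sections.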

You can’t optimize what you don’t measure

You don’t have to abandon every classic metric overnight, but if you’re still reporting on CTR while your customers are getting answers from AI systems that never show a link, your strategy is out of sync with the market.

We’re entering a new era of discovery – one shaped more by retrieval than ranking. The smartest marketers won’t just adapt to that reality.

They’ll measure it.

This article was originally published on Duane Forrester Decodes on Substack (as 12 New KPIs for the GenAI Era: The Death of the Old SEO Dashboard) and is republished with permission.
