Technical SEO: Don’t rush the process

In an era where efficiency is key, many businesses question the time and resources spent on technical SEO audits.

However, cutting corners in this critical area can lead to incomplete insights and missed opportunities.

Let’s dive into why technical SEO deserves a firm investment in both human effort and time, starting with the often-overlooked challenge of crawl time.

Crawl time: The primary hindrance

Reducing human resource time in your SEO or digital marketing department by cutting technical SEO may be unwise.

Why?

The primary factor behind the time taken for audits is crawl time.

With today’s complex web architectures, this is inevitable.

Ecommerce websites, in particular, have rapidly expanding footprints with countless product and blog pages.

Each product often includes multiple images, multiplying the number of on-site addresses a crawler has to fetch.

Employers and clients frequently ask:

“Why do these audits take so long? Can’t you just focus on the top issues and save time?”

The answer is both “yes” and “no.”

While focusing on top issues can slightly reduce the time spent on commentary and data visualization, most of the time taken in technical SEO audits is crawl time.

The overall time saved remains negligible because the crawl itself, rather than data analysis, dominates the timeline.
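To see why the crawl dominates, it helps to run the arithmetic. The sketch below is a back-of-envelope estimate only: the function name, the site figures, and the crawl rate of two URLs per second are all hypothetical, chosen to illustrate the scale rather than to describe any real site or tool.

```python
def estimate_crawl_hours(products, images_per_product, blog_pages, urls_per_second):
    """Rough crawl footprint and duration. All inputs are illustrative assumptions."""
    # Each product contributes its own page plus one address per image.
    total_urls = products * (1 + images_per_product) + blog_pages
    hours = total_urls / urls_per_second / 3600
    return total_urls, hours


# Hypothetical ecommerce site: 50,000 products, 5 images each, 2,000 blog posts.
total, hours = estimate_crawl_hours(50_000, 5, 2_000, urls_per_second=2.0)
print(f"{total:,} URLs, about {hours:.1f} hours")  # 302,000 URLs, about 41.9 hours
```

Under these assumed numbers the crawl alone runs for well over a day, which is why trimming the commentary barely moves the delivery date.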

While some argue crawl time is machine time and should not affect human effort, this is only partially true.

Platforms like Semrush or Ahrefs can streamline crawling if properly set up, monitored, and funded to handle all web properties continuously.

However, exporting, pivoting, and analyzing data still require significant manual effort.

Technical SEO experts can rarely rely on platform-generated reports without further refinement.

For instance, most SEO crawlers struggle with identifying true duplicate content.

Often, what is flagged as duplicate turns out to be parameter URLs, which Google often consolidates under one canonical address rather than indexing separately.

Similarly, failed canonical tag implementations can falsely appear as duplicate content.
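A first pass at this refinement can be scripted. The sketch below assumes you have exported the URLs a crawler flagged as duplicates into a plain list; the function names are hypothetical and not part of any crawler's API. It separates parameter variants of the same path from flagged URLs that still need a human look.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def strip_query(url):
    """Return the URL without its query string or fragment."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def triage_duplicates(flagged_urls):
    """Split flagged URLs into parameter variants and candidates for manual review."""
    parameter_variants = defaultdict(list)
    needs_review = []
    for url in flagged_urls:
        if urlsplit(url).query:
            # Grouped under the parameter-free address they are variants of.
            parameter_variants[strip_query(url)].append(url)
        else:
            needs_review.append(url)
    return dict(parameter_variants), needs_review


flagged = [
    "https://example.com/shoes?sort=price",
    "https://example.com/shoes?color=red",
    "https://example.com/about",
    "https://example.com/about-us",
]
variants, review = triage_duplicates(flagged)
```

This is only triage. A parameter can change what a page shows, so the grouped URLs still deserve a spot check before they are dismissed.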

Using tools like Screaming Frog adds another layer of complexity.

While highly cost-effective and powerful, it outputs raw spreadsheets requiring manual analysis. Its issues tab is rarely accurate without further data filtering.

As a client-side tool, Screaming Frog also requires the user’s machine to remain active during crawls.

If employees are using personal machines, they may be reluctant to leave them running overnight without proper compensation.

Additionally, the tool does not automatically adjust crawl rates, necessitating human supervision to avoid unintentional DDoS-like behavior.
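The judgment a person applies while supervising a crawl can be approximated with a simple backoff rule. The sketch below is not how Screaming Frog or any other tool behaves. It is a hypothetical helper with assumed thresholds that shows the kind of adjustment a human otherwise makes by hand: slow down when the server struggles, speed up cautiously when it is healthy.

```python
def next_delay(current_delay, status_code, response_seconds,
               min_delay=0.5, max_delay=30.0, slow_threshold=2.0):
    """Return the pause, in seconds, to use before the next request.

    All thresholds are illustrative assumptions, not recommended values.
    """
    if status_code == 429 or status_code >= 500:
        # The server is rate limiting or erroring: back off sharply.
        new_delay = current_delay * 2
    elif response_seconds > slow_threshold:
        # Responses are slowing down: ease off.
        new_delay = current_delay * 1.5
    else:
        # Healthy response: speed up gradually.
        new_delay = current_delay * 0.9
    # Keep the delay within the configured bounds.
    return max(min_delay, min(max_delay, new_delay))
```

A crawl loop would call `next_delay` after each response and sleep for the returned value before fetching the next address.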

While crawl time is primarily machine-driven, human oversight and intervention are often required.

Assuming that reducing crawl time will significantly shorten technical SEO audits can lead to inaccurate results and neglected insights.

HTML tag mutuality

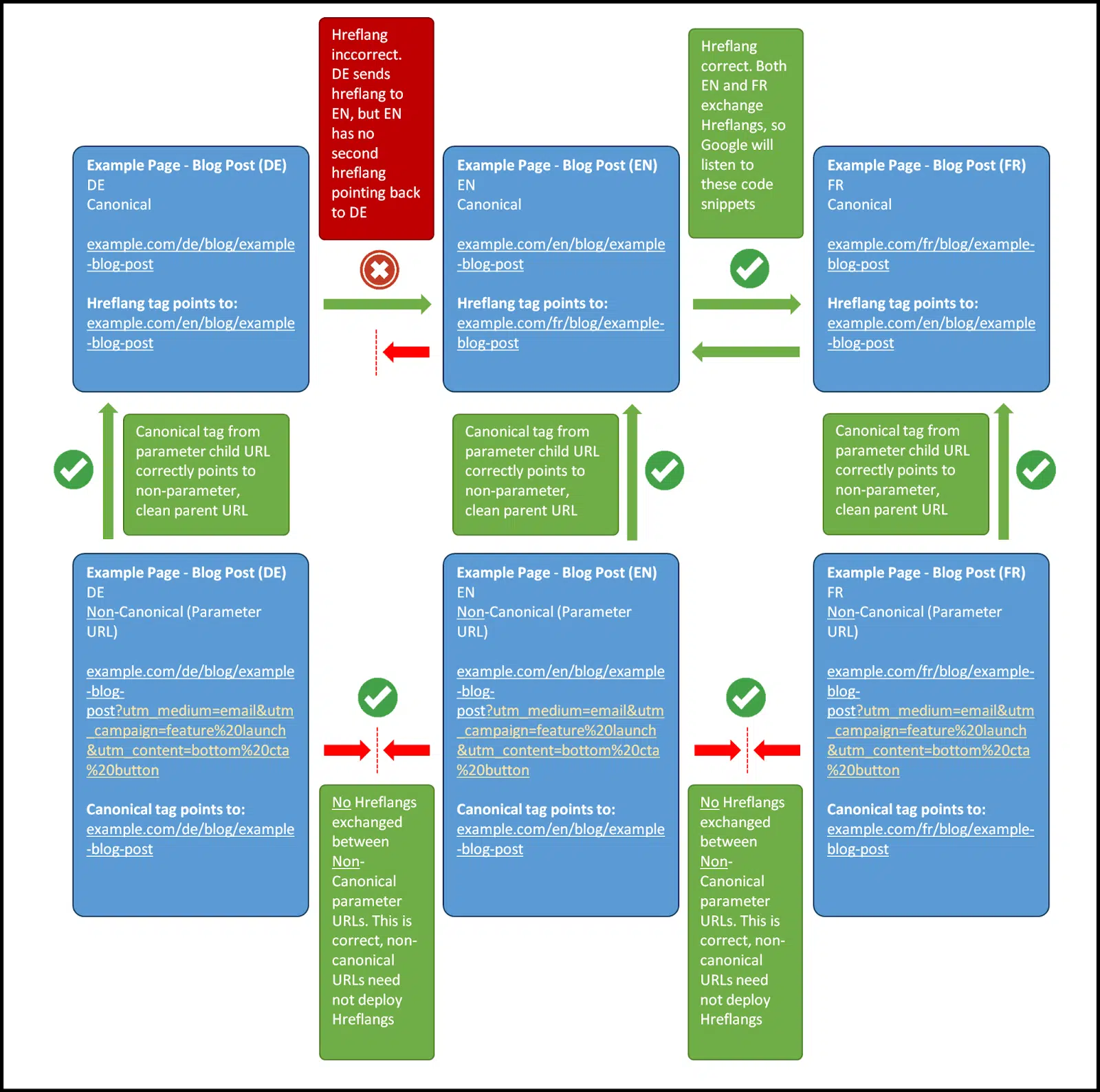

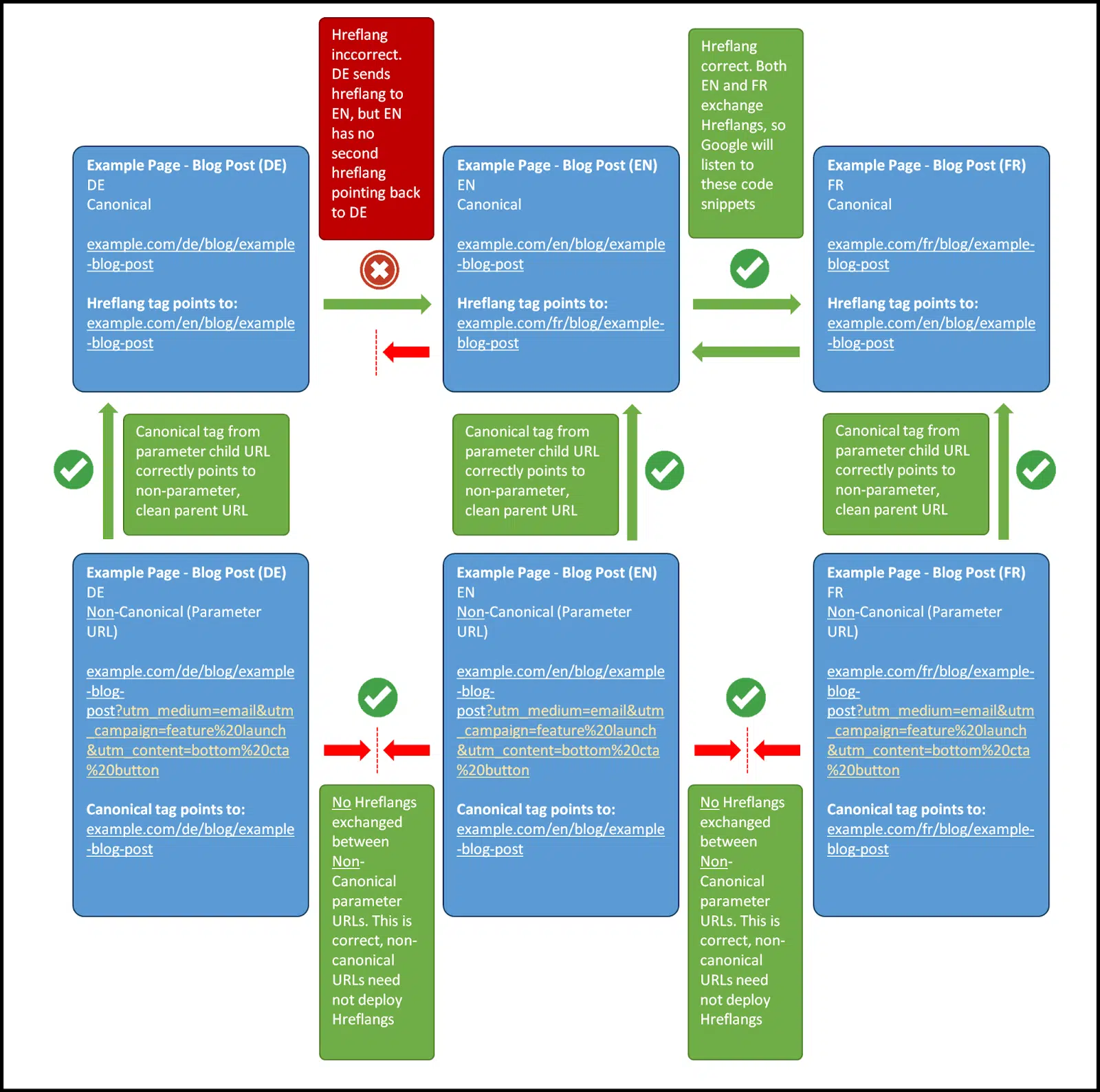

HTML tag mutuality, particularly with hreflang tags, demonstrates why reducing crawl time is inadvisable if you want accurate technical SEO insights.

As SEO has evolved, mutually dependent HTML tags, like hreflang tags, have become increasingly common.

Hreflang tags define relationships between pages in different languages and must always be reciprocal.

If one page references another with an hreflang tag, but the destination page does not carry a return tag pointing back, the relationship is invalid and ignored by Google.

Even non-mutual tags, such as canonical tags, often reference external addresses that also need to be crawled.

Crawling only one section of a site (e.g., one language variant) leaves you unable to verify whether hreflang tags point back as required.

This can result in unflagged errors that are critical for site performance but remain undetected due to partial crawl data.
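The reciprocity check itself is easy to express once the crawl data is in hand. The sketch below assumes a hypothetical export shaped as a dictionary, where every crawled page is a key and its value maps language codes to hreflang targets (empty if the page has none). It separates targets that demonstrably fail to point back from targets that simply were not crawled.

```python
def check_hreflang(hreflang_map):
    """hreflang_map: {page_url: {lang_code: target_url}} for every crawled page."""
    missing_return = []  # target was crawled but does not point back
    unverifiable = []    # target is outside the crawl sample
    for page, alternates in hreflang_map.items():
        for target in alternates.values():
            if target == page:
                continue  # self-referencing tag, nothing to verify
            if target not in hreflang_map:
                unverifiable.append((page, target))
            elif page not in hreflang_map[target].values():
                missing_return.append((page, target))
    return missing_return, unverifiable


crawl = {
    "https://example.com/en/": {
        "en": "https://example.com/en/",
        "de": "https://example.com/de/",
        "fr": "https://example.com/fr/",
    },
    # The German page was crawled but carries no tag back to the English page.
    "https://example.com/de/": {"de": "https://example.com/de/"},
}
missing_return, unverifiable = check_hreflang(crawl)
```

In this made-up sample the French page was never crawled, so its tag lands in `unverifiable`. That bucket grows with every section you leave out of the crawl.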

Similarly, canonical tags, though not requiring mutuality, can also pose challenges.

If a canonical tag points to a page outside your crawl sample, you cannot confirm whether it references a valid address.
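The same idea applies to canonical targets. The sketch below assumes two hypothetical exports from a crawl: a mapping of each page to its canonical URL, and a mapping of each crawled URL to its HTTP status. Any canonical that points outside the sample is reported as unverified rather than assumed to be fine.

```python
def classify_canonicals(canonicals, crawled_status):
    """canonicals: {page_url: canonical_url}; crawled_status: {url: http_status}."""
    report = {"ok": [], "broken": [], "unverified": []}
    for page, target in canonicals.items():
        if target not in crawled_status:
            # The target was never fetched, so its validity is unknown.
            report["unverified"].append((page, target))
        elif crawled_status[target] == 200:
            report["ok"].append((page, target))
        else:
            # The target was fetched but did not return a normal page.
            report["broken"].append((page, target))
    return report


report = classify_canonicals(
    canonicals={
        "https://example.com/a?ref=1": "https://example.com/a",
        "https://example.com/b": "https://example.com/old-b",
        "https://example.com/c": "https://shop.example.com/c",
    },
    crawled_status={
        "https://example.com/a": 200,
        "https://example.com/old-b": 404,
    },
)
```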

Here is a diagram of how canonical tags and hreflang tags should interface:

These issues illustrate how incomplete crawl data can hinder a thorough technical SEO audit.

Partial data forces you to rely on assumptions rather than concrete evidence, making it unwise to reduce crawl time to expedite audits.

Links and redirects

Producing accurate crawl data has required significant effort since the early days of the web, long before HTML tag mutuality became common.

Pages have always linked to others using the `<a>` tag.

If your crawl sample includes links pointing to addresses outside of it, you cannot verify whether those links function correctly without crawling the destination pages.

Some cloud crawling platforms address this by checking the status codes of external or redirected pages without analyzing their full HTML.

While this can help in certain cases, it often defers deeper issues that remain unexamined.

Redirects present similar challenges.

If a page in your crawl sample points to a destination outside it, you cannot fully analyze the redirect chain.

This can lead to inaccurate recommendations for shortening redirect chains, potentially causing significant problems for the site.
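A short sketch makes the problem concrete. It assumes a hypothetical export of redirects as a dictionary of source URL to destination, plus the set of URLs that were actually crawled. A chain is only marked complete when its final address is inside the sample. Otherwise the true end of the chain is unknown.

```python
def follow_redirects(start, redirects, crawled, max_hops=10):
    """redirects: {url: destination}; crawled: set of URLs in the crawl sample."""
    chain = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in chain:
            return chain + [current], "loop"
        chain.append(current)
        if len(chain) > max_hops:
            return chain, "too_long"
    # An uncrawled final hop may itself redirect further, so we cannot be sure.
    status = "complete" if current in crawled else "incomplete"
    return chain, status


redirects = {
    "https://example.com/a": "https://example.com/b",
    "https://example.com/b": "https://example.com/c",
    "https://example.com/x": "https://example.com/y",
}
crawled = {
    "https://example.com/a",
    "https://example.com/b",
    "https://example.com/c",
    "https://example.com/x",
}
chain_a, status_a = follow_redirects("https://example.com/a", redirects, crawled)
chain_x, status_x = follow_redirects("https://example.com/x", redirects, crawled)
```

Recommending that `/x` point straight to `/y` would be a guess here, because `/y` was never crawled and may redirect again.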

Be careful when reducing technical SEO time

There is no substitute for investing the necessary time in technical SEO.

While incomplete crawl samples or unattended crawls might seem like a way to reduce audit production time, they often create more issues than they solve.

Cutting corners can lead to overlooked problems, so it’s crucial to give your audits – and the experts conducting them – the time they require.

This doesn’t even account for the manual checks SEO professionals perform in addition to crawling, data handling, formatting, and analysis.

These combined efforts make it clear that the time spent on technical SEO is justified.

Avoid excessive pruning or shortcuts in this discipline.

If you must work with partial crawl data, ensure at least 70% crawl completion – 50% at an absolute minimum.

Anything less risks compromising the accuracy of your audit.
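How completion is measured is left open above. One workable assumption, used in the sketch below, is to compare the crawled URLs against a list of known addresses such as those in the XML sitemap. The function names and verdict labels are hypothetical. Only the 70% and 50% thresholds come from the guidance above.

```python
def crawl_completion(crawled_urls, known_urls):
    """Percentage of known URLs (for example, from a sitemap) that were crawled."""
    known = set(known_urls)
    if not known:
        raise ValueError("known_urls must not be empty")
    return 100 * len(known & set(crawled_urls)) / len(known)


def completion_verdict(percent):
    """Map a completion percentage onto the 70% and 50% thresholds."""
    if percent >= 70:
        return "acceptable"
    if percent >= 50:
        return "bare minimum"
    return "insufficient"


known = [f"https://example.com/p/{i}" for i in range(10)]
percent = crawl_completion(known[:6], known)
print(percent, completion_verdict(percent))
```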

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
